[QUANT] multi LSTM - ets, coal, gas

This post runs a multivariate LSTM on three features: the EU carbon emission allowance price (EU ETS), the coal price (Newcastle), and the gas price (H/H).

1. Library Import

import warnings

warnings.filterwarnings('ignore')

from pathlib import Path

import numpy as np

import pandas as pd

from sklearn.metrics import mean_absolute_error

from sklearn.preprocessing import minmax_scale

import tensorflow as tf

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, LSTM

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style("whitegrid")

np.random.seed(42)

2. Data Load and Preprocessing

ets = pd.read_csv('/content/drive/MyDrive/EU ETS Future.csv', parse_dates=True)

gas = pd.read_csv('/content/drive/MyDrive/Natural Gas Futures Historical Data.csv', parse_dates=True)

coal = pd.read_csv('/content/drive/MyDrive/Newcastle Coal Futures Historical Data.csv', parse_dates=True)

Load the data downloaded from Investing.com.

dfs = [ets, gas, coal]

columns = ['date','close','open','high','low','volume','return']

for df in dfs:
    df.columns = columns

Rename the columns of each DataFrame.

from datetime import datetime

def strp(x):
    # Dates that start with a digit use the Korean format; the rest use the English "Mon DD, YYYY" format
    if x[0].isnumeric():
        answer = datetime.strptime(x, "%Y년 %m월 %d일")
    else:
        answer = datetime.strptime(x, "%b %d, %Y")
    return answer

# Convert dates

ets['date'] = ets['date'].apply(strp)

gas['date'] = gas['date'].apply(strp)

coal['date'] = coal['date'].apply(strp)

# Before merging: keep only the date and the closing price of each series

ets = ets.rename(columns={"close":"ets"})[['date','ets']]

gas = gas.rename(columns={"close":"gas"})[['date','gas']]

coal = coal.rename(columns={"close":"coal"})[['date','coal']]

# Merge on date

df = pd.merge(left = ets, right=gas, on='date')

df = pd.merge(left = df, right=coal, on='date')

df = df.sort_values(by='date',ascending=True).set_index('date')

data = df.copy()

data

The 'date' column has object dtype, so it is converted to datetime before the three tables are merged.

fig, axes = plt.subplots(ncols=3, figsize=(20,6))

axes[0].plot(df)

axes[1].plot(np.log1p(df))

axes[2].plot(df.diff())

axes[0].set_title('original')

axes[1].set_title("log 1p")

axes[2].set_title("diff")

for ax in axes:
    ax.legend(['ets','gas','coal'])

Take a look at the data.

In the original and diff plots, coal fluctuates much more than the other two series.

from sklearn.preprocessing import MinMaxScaler

mm = MinMaxScaler()

data_scaled = mm.fit_transform(data)

data_scaled = pd.DataFrame(data_scaled, index=data.index, columns= ['ets','gas','coal'])

data_scaled

Apply min-max scaling.

print(mm.data_max_, mm.data_min_)

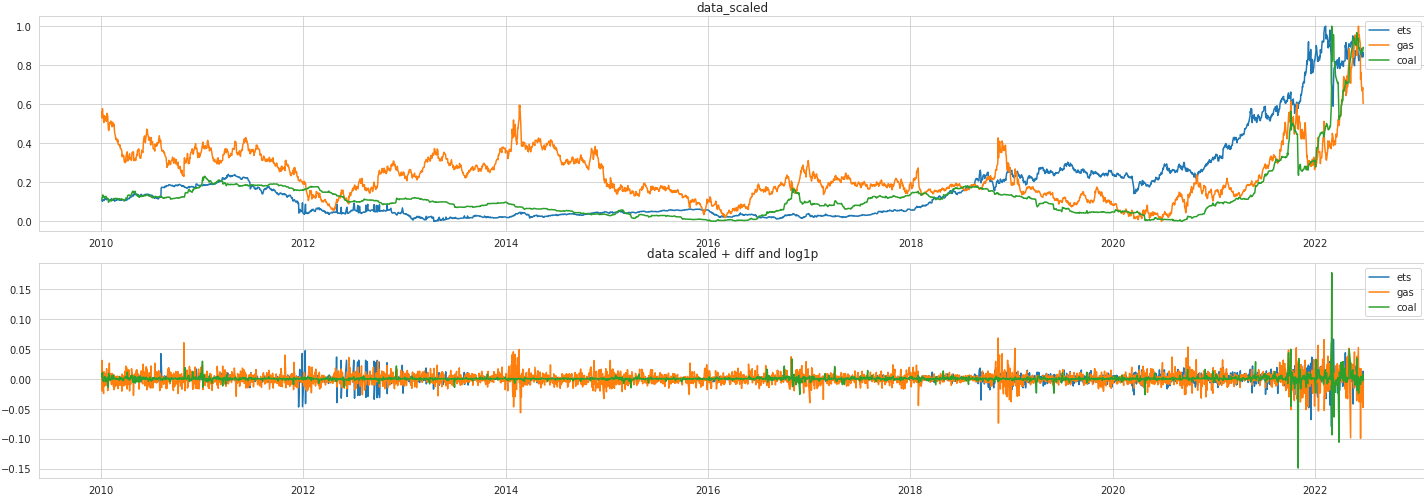
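As a minimal sketch of what the scaling step computes, the NumPy snippet below reproduces the min-max formula and its inverse on made-up numbers. The helper names (`minmax_fit`, `minmax_transform`, `minmax_inverse`) and the toy prices are assumptions for illustration and are not part of the original notebook. The inverse is the same expression the post applies later with `mm.data_min_` and `mm.data_max_`.

```python
import numpy as np

def minmax_fit(x):
    """Return the per-column minimum and maximum of a 2-D array."""
    return x.min(axis=0), x.max(axis=0)

def minmax_transform(x, x_min, x_max):
    """Scale each column to [0, 1] using the fitted minimum and maximum."""
    return (x - x_min) / (x_max - x_min)

def minmax_inverse(x_scaled, x_min, x_max):
    """Map scaled values back to the original units."""
    return x_scaled * (x_max - x_min) + x_min

# Made-up prices; the three columns stand in for ets, gas and coal
prices = np.array([[20.0, 2.0, 50.0],
                   [30.0, 4.0, 150.0],
                   [25.0, 3.0, 100.0]])

col_min, col_max = minmax_fit(prices)
scaled = minmax_transform(prices, col_min, col_max)
restored = minmax_inverse(scaled, col_min, col_max)

print(scaled)
print(restored)
```

Because each column is scaled with its own minimum and maximum, the large swings in coal no longer dominate the other two series after scaling.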

fig, axes = plt.subplots(nrows=2, figsize=(20,7))

fig.tight_layout()

axes[0].plot(data_scaled)

axes[0].set_title("data_scaled")

axes[0].legend(['ets','gas','coal'])

axes[1].plot(pd.DataFrame(data_scaled).apply(np.log1p).diff())

axes[1].set_title("data scaled + diff and log1p")

axes[1].legend(['ets','gas','coal'])

3. Split Data

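def create_multivariate_data(data, window_size):
    # Target: the row that follows each window
    y = data[window_size:]
    n = data.shape[0]
    # Input: overlapping windows stacked into (samples, window_size, features)
    X = np.stack([data[i: j]
                  for i, j in enumerate(range(window_size, n))], axis=0)
    return X, y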

# Select the data range

temp = data_scaled.loc['2020-07-01':]  # data from 2020-07-01 onward

# Set the window size

window_size=10

X, y = create_multivariate_data(temp, window_size=window_size)

print(X.shape, y.shape)
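To make the windowing concrete, here is a small NumPy-only sketch of the same idea on a toy array. The function name `make_windows` and the toy data are assumptions for illustration; the logic is meant to mirror `create_multivariate_data`, where each sample holds `window_size` consecutive rows and its target is the row immediately after the window.

```python
import numpy as np

def make_windows(values, window_size):
    """Stack overlapping windows; the target is the row right after each window."""
    n = values.shape[0]
    X = np.stack([values[i:i + window_size] for i in range(n - window_size)], axis=0)
    y = values[window_size:]
    return X, y

# Toy data: 8 time steps, 3 features
toy = np.arange(24, dtype=float).reshape(8, 3)
X_toy, y_toy = make_windows(toy, window_size=5)

print(X_toy.shape, y_toy.shape)
```

With 8 rows and a window of 5, only 3 samples remain, so the number of samples is always the number of rows minus `window_size`.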

# Determine the test size

size = 0.1

test_size=int(X.shape[0]*size)

train_size = X.shape[0]-test_size

print(train_size, test_size)

X_train, y_train = X[:train_size], y[:train_size]

X_test, y_test = X[train_size:], y[train_size:]

print(X_train.shape, y_train.shape, X_test.shape, y_test.shape)

# Determine the validation size

size = 0.35

valid_size = int(X_train.shape[0]*size)

train2_size = X_train.shape[0]-valid_size

print(train2_size, valid_size, test_size)

X_train2, y_train2 = X_train[:train2_size], y_train[:train2_size]

X_valid, y_valid = X_train[train2_size:], y_train[train2_size:]

print(X_train2.shape, X_valid.shape, X_test.shape)

4. LSTM Modeling

n_features = 3 # ets, gas, coal

output_size = 3

model = Sequential([
    LSTM(units=64,
         dropout=0.3,
         recurrent_dropout=0.1,
         input_shape=(window_size, n_features),
         return_sequences=False),
    # Disabled layers (wrapped in a triple-quoted string in the original):
    # LSTM(units=128,
    #      dropout=0.3,
    #      recurrent_dropout=0.1,
    #      return_sequences=True),
    # LSTM(units=64,
    #      dropout=0.3,
    #      recurrent_dropout=0.1,
    #      return_sequences=False),
    Dense(32),
    Dense(output_size)
])

model.summary()

from tensorflow.keras.optimizers import Adam

optimizer = Adam(learning_rate=0.0005)

model.compile(loss='mse', optimizer=optimizer)

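# lstm_path is not defined anywhere in the post; the value below is an assumed placeholder
lstm_path = 'lstm_model.h5'
checkpointer = ModelCheckpoint(filepath=lstm_path,
                               verbose=1,
                               monitor='val_loss',
                               mode='min',
                               save_best_only=True)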

early_stopping = EarlyStopping(monitor='val_loss',
                               patience=10,
                               restore_best_weights=True)

result = model.fit(X_train2,
                   y_train2,
                   epochs=100,
                   batch_size=20,
                   shuffle=False,
                   validation_data=(X_valid, y_valid),
                   callbacks=[early_stopping, checkpointer],
                   verbose=1)

5. Prediction

y_pred_train2 = pd.DataFrame(model.predict(X_train2),
                             columns=['ets','gas','coal'],
                             index=y_train2.index)
y_pred_train2.info()

y_pred_valid = pd.DataFrame(model.predict(X_valid),
                            columns=['ets','gas','coal'],
                            index=y_valid.index)
y_pred_valid.info()

y_pred_test = pd.DataFrame(model.predict(X_test),
                           columns=['ets','gas','coal'],
                           index=y_test.index)
y_pred_test.info()

6. Result Visualization

y_train2_ = y_train2*(mm.data_max_-mm.data_min_)+mm.data_min_

y_valid_ = y_valid*(mm.data_max_-mm.data_min_)+mm.data_min_

y_test_ = y_test*(mm.data_max_-mm.data_min_)+mm.data_min_

y_pred_train2_ = pd.DataFrame(y_pred_train2, index=y_train2.index, columns=y_train2.columns)*(mm.data_max_-mm.data_min_)+mm.data_min_

y_pred_valid_ = pd.DataFrame(y_pred_valid, index=y_valid.index, columns=y_valid.columns)*(mm.data_max_-mm.data_min_)+mm.data_min_

y_pred_test_ = pd.DataFrame(y_pred_test, index=y_test.index, columns=y_test.columns)*(mm.data_max_-mm.data_min_)+mm.data_min_

fig, axes = plt.subplots(ncols=3, figsize=(17,4))

col_dict = {"ets":"ETS","gas":"GAS","coal":"COAL"}

for i, col in enumerate(y_test.columns, 0):
    y_train2_.loc[:,col].plot(ax=axes[i], label='train', title=col_dict[col])
    y_valid_[col].plot(ax=axes[i], label='valid')
    y_test_[col].plot(ax=axes[i], label='test')
    y_pred_train2_[col].plot(ax=axes[i], label='pred_train2')
    y_pred_valid_[col].plot(ax=axes[i], label='pred_valid')
    y_pred_test_[col].plot(ax=axes[i], label='pred_test')
    axes[i].set_xlabel('')
    axes[i].legend()

fig, axes = plt.subplots(ncols=3, figsize=(17,4))

col_dict ={"ets":"ETS","gas":"GAS","coal":"COAL"}

for i, col in enumerate(y_test.columns, 0):
    y_train2_.loc[:,col].plot(ax=axes[i], label='true_train', title=col_dict[col])
    y_pred_train2_[col].plot(ax=axes[i], label='pred_train2')
    axes[i].set_xlabel('')
    axes[i].legend()

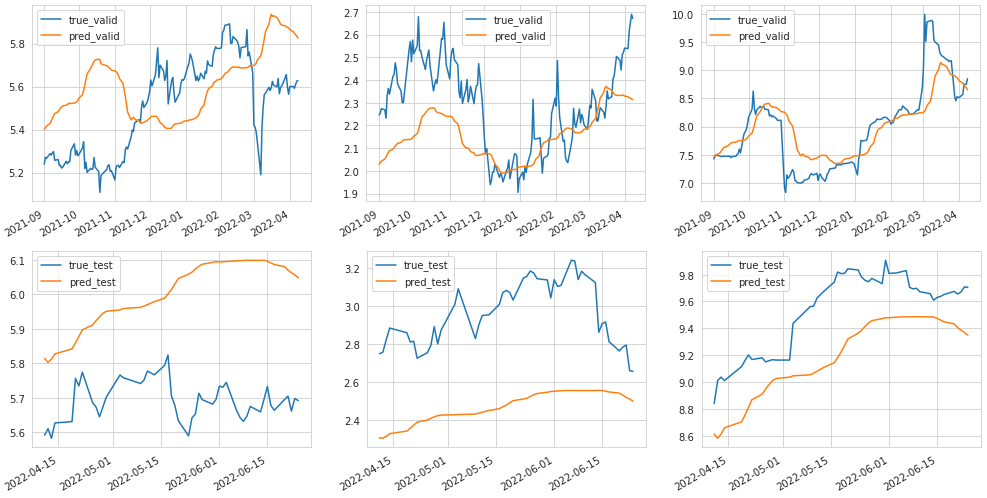

fig, axes = plt.subplots(ncols=3, figsize=(17,4))

col_dict ={"ets":"ETS","gas":"GAS","coal":"COAL"}

for i, col in enumerate(y_test.columns, 0):
    y_valid_[col].plot(ax=axes[i], label='true_valid')
    y_pred_valid_[col].plot(ax=axes[i], label='pred_valid')
    axes[i].set_xlabel('')
    axes[i].legend()

fig, axes = plt.subplots(ncols=3, figsize=(17,4))

col_dict ={"ets":"ETS","gas":"GAS","coal":"COAL"}

for i, col in enumerate(y_test.columns, 0):
    y_test_[col].plot(ax=axes[i], label='true_test')
    y_pred_test_[col].plot(ax=axes[i], label='pred_test')
    axes[i].set_xlabel('')
    axes[i].legend()

From left to right: ETS, GAS, COAL.

From top to bottom: all / TRAIN / VALID / TEST.

✔ Selecting the data range first and then applying min-max scaling gives better results.

7. Result : data = log1p(df)

For the ETS validation data, the predicted direction was completely wrong.
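One way to put a number on "wrong direction" is a directional accuracy score. The sketch below is an assumption about how this could be measured and is not taken from the original notebook: it compares the sign of the step-to-step change in the true series with the sign of the change in the predicted series. The function name `directional_accuracy` and the toy values are hypothetical.

```python
import numpy as np

def directional_accuracy(y_true, y_pred):
    """Share of steps where the true and predicted changes have the same sign."""
    true_move = np.sign(np.diff(y_true))
    pred_move = np.sign(np.diff(y_pred))
    return float(np.mean(true_move == pred_move))

# Made-up series for illustration only
y_true_toy = np.array([1.0, 2.0, 3.0, 2.0, 4.0])
y_pred_toy = np.array([1.0, 1.5, 1.2, 1.0, 2.0])

score = directional_accuracy(y_true_toy, y_pred_toy)
print(score)  # 0.75: three of the four moves agree in sign
```

A score well below 0.5 on a validation slice would match the visual impression that the forecast moves against the actual series.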

8. Result : MinMaxScaling applied after changing the data range

Applying MinMaxScaling only to the range from 2020-07-01 onward gives much better results.

Pay close attention to the range over which the scaling is computed.
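The effect of the scaling range can be illustrated with a small NumPy sketch. The series below is made up (a low-priced early stretch followed by a higher-priced recent stretch), and the helper `minmax` is a hypothetical name. It only shows how the same recent values map to different scaled ranges depending on which span supplies the minimum and maximum, which may be one reason the results differ; the post itself does not state the cause.

```python
import numpy as np

# Made-up series: a low-priced early stretch followed by a higher-priced recent stretch
full = np.concatenate([np.linspace(5.0, 10.0, 50), np.linspace(40.0, 80.0, 50)])
recent = full[50:]  # stands in for the slice from 2020-07-01 onward

def minmax(x, ref):
    """Scale x with the minimum and maximum taken from ref."""
    return (x - ref.min()) / (ref.max() - ref.min())

recent_scaled_on_full = minmax(recent, full)      # scaler fitted on the whole history
recent_scaled_on_recent = minmax(recent, recent)  # scaler fitted on the selected range only

print(recent_scaled_on_full.min(), recent_scaled_on_full.max())
print(recent_scaled_on_recent.min(), recent_scaled_on_recent.max())
```

When the minimum and maximum come from the whole history, the recent values use only part of the [0, 1] interval; when they come from the selected range, the values span the full interval.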
